Teaching machines to see like people

Researchers in Tübingen develop new approach to 3D-aware image synthesis

- 10 December 2020

- Autonomous Vision

With Generative Radiance Fields for 3D-Aware Image Synthesis (GRAF), scientists from the University of Tübingen and the Max Planck Institute for Intelligent Systems have vastly improved the quality of machine-generated images of single objects that stay consistent when the object is viewed from different 3D viewpoints.

In the field of computer vision, a key challenge lies in teaching machines to “see” and draw conclusions about complex 3D scenes, just as human beings do. The ultimate aim is to enable technologies in realms such as autonomous driving and virtual reality to operate safely and reliably. While science is still a long way from achieving this, researchers at the University of Tübingen and the Max Planck Institute for Intelligent Systems (MPI-IS) have taken the field one step further with GRAF: Generative Radiance Fields for 3D-Aware Image Synthesis. They present their work this week at the Thirty-fourth Conference on Neural Information Processing Systems (NeurIPS 2020). One of the world’s leading machine learning conferences, NeurIPS 2020 is being held virtually until December 12.

“Human beings are capable of looking at a 2D image and imagining exactly what it looks like from different angles and viewpoints in 3D,” said Katja Schwarz, the paper’s lead author.

“But they can do even more: they can also imagine completely new scenes because they understand the underlying concepts required to create them. This calls for a sophisticated ability to reason in 3D that machines simply don’t have yet. Machines can already generate new 2D images really well, but science is still working on enabling them to reason better in 3D and learn abstract three-dimensional concepts. With GRAF, we offer an approach that vastly improves 3D-aware image synthesis for single objects and takes an important step in this direction.”

Schwarz is currently a PhD student in the Autonomous Vision Group, which is split between the University of Tübingen and the MPI-IS. Her co-authors were fellow researchers Yiyi Liao and Michael Niemeyer, as well as Andreas Geiger, who leads the group.

While methods for 3D-aware image synthesis already exist, they often require 3D training data or multiple images of the same scene with known camera poses. This information can be difficult to collect in real-world situations. An autonomous vehicle, for instance, must be able to operate safely when pedestrians suddenly cross the road or other unexpected obstacles appear. For obvious reasons, such scenes cannot be pre-recorded in real life, making simulation an important part of the training pipeline.

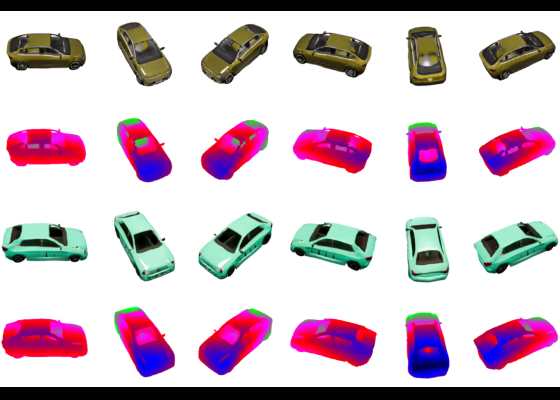

In response to this challenge, the scientists aimed to create a 3D-aware model that learns only from 2D images without viewpoint information. This is a challenging task, and existing approaches either produce low-resolution images or depict objects inconsistently when the viewpoint changes. With GRAF, the scientists set out to create a model that scales to high image resolutions while keeping object depictions consistent across viewpoints. In the long term, such models could generate realistic simulations for training robots or autonomous vehicles.

To find out how a generative model could be made aware of the world’s 3D nature solely on the basis of 2D images, the researchers incorporated a virtual camera into their model. They then controlled its position so that it could view the objects in the images from different angles. The 3D objects are modeled in a memory-efficient way using representations known as radiance fields: rather than storing an object in a 3D grid, a radiance field describes it as a continuous function that assigns a color and a density to every point in space. This is key for scaling the method to high image resolutions. For a given camera viewpoint, every 3D object can then be rendered to a 2D image. By comparing the generated renderings to real images, the model learns to generate 3D-consistent images across camera viewpoints.
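The camera-and-rendering idea described above can be illustrated with a short sketch. Everything in it is an assumption made for illustration, not GRAF's actual code: the helper names `sample_camera_on_sphere`, `toy_radiance_field` and `render_ray` are hypothetical, the "radiance field" is a hand-written red sphere rather than a learned neural network, the virtual camera is assumed to sit on the upper hemisphere looking at the origin, and a single pixel is rendered with the standard NeRF-style alpha compositing of samples along a ray. The training step that compares renderings with real images is left out.

```python
import math
import random


def sample_camera_on_sphere(radius, rng):
    """Pick a random virtual-camera position on the upper hemisphere.

    Assumption for illustration: the camera always looks at the origin,
    where the object sits.
    """
    azimuth = rng.uniform(0.0, 2.0 * math.pi)
    elevation = rng.uniform(0.0, 0.5 * math.pi)  # upper hemisphere only
    x = radius * math.cos(elevation) * math.cos(azimuth)
    y = radius * math.cos(elevation) * math.sin(azimuth)
    z = radius * math.sin(elevation)
    return (x, y, z)


def toy_radiance_field(point):
    """Hypothetical stand-in for a learned field: a red unit sphere.

    Like a radiance field, it maps any 3D point to (density, rgb color)
    instead of storing values in a voxel grid.
    """
    inside = sum(c * c for c in point) <= 1.0
    density = 10.0 if inside else 0.0
    return density, (1.0, 0.0, 0.0)


def render_ray(origin, direction, near, far, n_samples, field):
    """Alpha-composite field samples along one camera ray into a pixel."""
    delta = (far - near) / n_samples
    transmittance = 1.0  # fraction of light not yet absorbed
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * delta  # midpoint of the i-th interval
        point = tuple(o + t * d for o, d in zip(origin, direction))
        sigma, rgb = field(point)
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this interval
        weight = transmittance * alpha
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    return tuple(color), 1.0 - transmittance  # pixel color, total opacity


# Usage: place the camera 4 units away and render the ray through the
# image center, which points straight at the object.
cam = sample_camera_on_sphere(4.0, random.Random(0))
norm = math.sqrt(sum(c * c for c in cam))
direction = tuple(-c / norm for c in cam)
rgb, opacity = render_ray(cam, direction, near=2.0, far=6.0,
                          n_samples=64, field=toy_radiance_field)
print(f"pixel color={rgb}, opacity={opacity:.6f}")
```

In this sketch the field is only queried at the points a ray actually visits, so memory does not grow with the size of a 3D grid. Rendering a full image would repeat `render_ray` once per pixel, with each ray's direction derived from the camera pose.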

“We analyzed our model on several synthetic and real-world datasets, and we found that our approach with radiance fields scales well to high resolutions while maintaining consistency across different camera viewpoints,” said Schwarz. The scientists tested GRAF not only on images of objects like cars and chairs, but also on natural images of people, birds, and cats, with very good results. As a next step, they plan to extend their approach beyond single objects to even more complex real-world scenes.

More information about the GRAF project can be found at https://autonomousvision.github.io/graf/.
